Web scraping in Java with Jsoup, Part 2 (How-to)

Web scraping refers to programmatically downloading a page and traversing its DOM to extract the data you are interested in. I wrote a parser class in Java to perform the web scraping for my blog analyzer project. In Part 1 of this how-to I explained how I set up the calling mechanism for executing the parser against blog URLs. Here, I explain the parser class itself.

Before getting into the code, it is important to note the HTML structure of the document that will be parsed. The pages of The Dish are quite heavy, full of menus, JavaScript, and other markup unrelated to the content, but the area of interest is the set of blog posts themselves. This example shows the HTML structure of each blog post on The Dish:


Web scraping in Java with Jsoup, Part 1

To obtain the data for my blog analyzer, content must be parsed from the pages of the blog itself, a process called “web scraping”. Jsoup parses the pages, and because this is a Spring project, Spring scheduling invokes the parser.

The following classes were created:

- BlogRequest – invokes the parser on a given blog URL, passes parsed content to service layer

- BlogRequestQueue – queues up and executes blog requests

- BlogParser – interface with parseURL method

- DishBlogParser – implements BlogParser, used to parse the blog The Dish
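
The division of labor in the list above can be sketched in plain Java. This is only an illustration under stated assumptions, not the project's actual code: the return type of parseURL, the PostSink interface standing in for the service layer, and the method names execute, add, and executeAll are all hypothetical, and the Spring wiring and scheduling are left out entirely.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Queue;

// Interface named in the post; the List<String> return type is an assumption.
interface BlogParser {
    List<String> parseURL(String url);
}

// Hypothetical stand-in for the service layer that receives parsed content.
interface PostSink {
    void save(String url, List<String> posts);
}

// Invokes the parser on one blog URL and hands the result to the sink.
class BlogRequest {
    private final String url;
    private final BlogParser parser;
    private final PostSink sink;

    BlogRequest(String url, BlogParser parser, PostSink sink) {
        this.url = url;
        this.parser = parser;
        this.sink = sink;
    }

    void execute() {
        sink.save(url, parser.parseURL(url));
    }
}

// Queues up requests and executes them in FIFO order.
class BlogRequestQueue {
    private final Queue<BlogRequest> pending = new ArrayDeque<>();

    void add(BlogRequest request) {
        pending.add(request);
    }

    // Drains the queue and returns how many requests were executed.
    int executeAll() {
        int executed = 0;
        BlogRequest next;
        while ((next = pending.poll()) != null) {
            next.execute();
            executed++;
        }
        return executed;
    }
}

public class BlogQueueSketch {
    public static void main(String[] args) {
        // Stub parser: a real implementation such as DishBlogParser would fetch and parse the page.
        BlogParser stub = url -> Collections.singletonList("post from " + url);
        List<String> saved = new ArrayList<>();
        PostSink sink = (url, posts) -> saved.addAll(posts);

        BlogRequestQueue queue = new BlogRequestQueue();
        queue.add(new BlogRequest("http://example.com/page/1", stub, sink));
        queue.add(new BlogRequest("http://example.com/page/2", stub, sink));

        System.out.println(queue.executeAll() + " requests executed, " + saved.size() + " posts saved");
    }
}
```

Keeping the parser behind an interface means the queue and request classes never need to know which blog is being parsed, so a stub can replace DishBlogParser in tests.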

Each of these (aside from the interface) is configured as a Spring-managed bean. The code for BlogRequest:


A Blog Analyzer Project

In the coming days, I will be writing about a project I’m working on that analyzes Andrew Sullivan’s blog The Dish, one of the most popular blogs on American politics. The project, which will use Spring 3, JSP/JSTL, JDBC, PostgreSQL, and jQuery/Ajax, will scrape the blog, extract key data elements, and reorganize and present this data in new and interesting ways. Additionally, I will create a bookmarklet that will add value to the blog site itself.

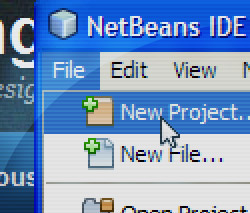

Development tools used include NetBeans 7.0, Firefox with Firebug, and the always handy psql Postgres command line tool.

There are many interesting technical challenges involved, and I will write about them on this blog. There is also the question of copyright law, an unavoidable concern when building on content from a third party. Copyright law was not meant to stifle innovation, though, provided certain criteria are met: the content originator must not be harmed in the marketplace, repurposed content must be transformed into a novel work, and only small portions may be used. I believe my project fits these criteria.